Key Resources

- Please refer to the OAC Handbook for additional details, examples, and suggested references

- Anthology (Campus Labs) - for Authorized Faculty to View and Enter Data

- Anthology (Campus Labs) Instructions for Entering Data

- CSLO Dashboards

Frequency of Assessment

At minimum, all learning outcomes must be assessed once every 6 years. However, OAC recommends assessing more frequently to track student success more closely, which helps inform effective teaching practices. We ask that you thoughtfully reflect and plan assessments in a way that will provide meaningful feedback for your department, given your specific needs. Some things to consider as you have these conversations:

- Level of course: introductory, major, capstone

- Frequency at which the course is offered

- Opportunities to collect and analyze data as a faculty group.

- If courses are assessed at least once every 3 years, outcomes data will be available when writing the department’s comprehensive program reviews (a 3-year cycle).

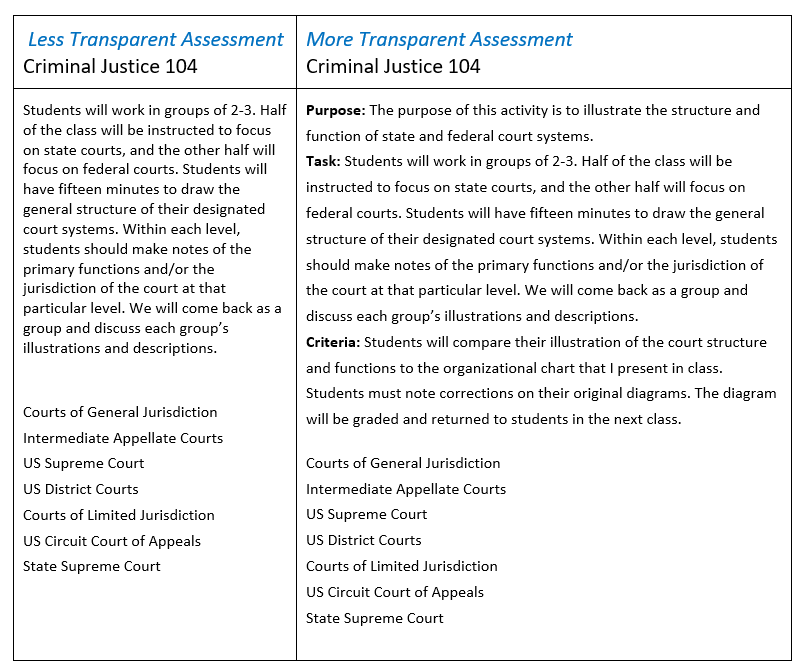

Designing Transparent and Equitable Assessments

Equitable assessments create a level playing field for opportunity in the classroom; students are given an equal opportunity to succeed through clear and transparent assessment design and instruction. Assessments that are transparent and equitable contain these features:

- Purpose - The purpose of the assessment is clearly defined and related to skills/knowledge that will be relevant beyond the course

- Task - How to do the assessment: steps to take, what to avoid

- Criteria - Students are given a rubric or checklist for success

- Options to demonstrate knowledge are equitable for all students.

(Source)

Here is the link to Example Assignments (more and less transparent) by discipline.

Types of Assessment

Direct vs. Indirect

Direct assessments ask students to demonstrate their learning. This type of assessment is usually used in course instruction (the Instructional Division).

Indirect assessments do not ask students to directly demonstrate what they have learned. This type of assessment does not provide direct information about what students are able to represent, produce, or demonstrate as a result of completing the course or the program. Indirect assessments, such as surveys, are an option but should be used to corroborate data gathered through a direct assessment. Indirect assessment is typically used in Student Services assessment.

Formative vs. Summative

Formative assessments provide students with feedback on their progress towards achieving a single outcome or a set of outcomes. Formative assessments can be related to specific content areas of the Course Outline of Record (COR). These assessments may be smaller in scope as part of a scaffolded design and are favored in research related to equitable assignments. (Source)

Summative assessments provide the instructor, the program, and the college information on student achievement of CSLOs, PSLOs, and/or Core Competencies for evaluation and continuous improvement of student learning. Some examples of summative assessments include portfolios, investigations/case studies, essay questions related to the discipline, observations, and journals.

Embedded Assessments

Embedded assessments are tasks integrated into courses and designed to collect specific information on course and/or program learning outcomes. These assessments are typically graded by course instructors and then pooled across sections to evaluate student learning at various scales. Embedded assessments are highly recommended, as they are easier to administer. (Source)

Capstone courses and portfolios are often the best method of direct assessment for CTE programs. However, when there is no capstone course, and when completers are at varying stages of their academic journey at MiraCosta, an embedded assessment that is aligned with the course/program learning outcome is an effective method to assess a program.

Use Rubrics to Effectively Score Assessments

Disciplines are encouraged to develop meaningful rubrics to ensure transparent assessment. For example, a standardized rubric may be created for an embedded assignment that links to a CSLO so that a consistent rubric can be used across course sections. Posting these rubrics for students to view as they are completing the assessment provides an important level of transparency.

Evaluate the Data

Data is evaluated within departments, led by key faculty and student services professionals involved with developing and teaching the learning outcomes.

Assessment data should be disaggregated by sub-populations of students and by modality (if appropriate). This is already occurring in our college-wide analysis of Core Competencies but has yet to be implemented for other spheres of assessment. The OAC is currently researching and evaluating systems that will enable broader data disaggregation at the course and program levels. As such systems are developed and implemented, the OAC will inform and train constituents.

The MiraCosta Core Competency dashboards provide a summary of college-wide data for each assessment that is disaggregated by a variety of parameters such as ethnicity, gender, age, and units completed. Although disaggregation is not currently possible with our CSLO or PSLO data, we can still evaluate the available data thoughtfully and critically. Try to identify potential causes for the successes or failures of each outcome. The following questions may help get you started:

- How many students met the desired achievement levels?

- Achievement levels are set by discipline; are the set levels meaningful/appropriate?

- Were the students aware of the learning outcome and how it was connected to this specific assessment?

- Were the students able to practice a similar assignment (or related skills) in advance of the assessment?

- When was the assessment given in the semester? Would earlier or later evaluation be more appropriate?

- Was a rubric used to grade the assessment? If so, does the rubric need any revisions? If not, would the development of a rubric be helpful?

- Did the results follow expected patterns? (Are those expectations realistic?)

- If disaggregated data is accessible, did you observe any disproportionately impacted groups based on race, ethnicity, gender, sexual orientation, socioeconomic status, age, and other available data points?

- Did the results indicate that groups of students succeeded at different levels?

- Did the results for some groups of students indicate an opportunity gap?
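Where disaggregated data is accessible, several of the questions above come down to simple percentages: how many students met the achievement level overall, and how each group compares with that overall rate. The following is a minimal Python sketch of that arithmetic, not an OAC tool or an Anthology feature. The records, the group labels, the proficiency cutoff of 3, and the 10-percentage-point gap threshold are all hypothetical values chosen for illustration; each discipline sets its own achievement levels.

```python
from collections import defaultdict

# Hypothetical records: (student group label, rubric score). Illustrative only.
records = [
    ("Group A", 4), ("Group A", 3), ("Group A", 2), ("Group A", 4),
    ("Group B", 2), ("Group B", 3), ("Group B", 1), ("Group B", 2),
]

PROFICIENCY_SCORE = 3   # assumed rubric score that counts as meeting the outcome
GAP_THRESHOLD = 10.0    # assumed gap (percentage points) worth discussing


def percent_meeting(scores, cutoff=PROFICIENCY_SCORE):
    """Return the percentage of scores at or above the cutoff."""
    if not scores:
        raise ValueError("no scores to evaluate")
    met = sum(1 for score in scores if score >= cutoff)
    return 100.0 * met / len(scores)


def disaggregate(rows, cutoff=PROFICIENCY_SCORE):
    """Return {group: percentage of that group meeting the cutoff}."""
    by_group = defaultdict(list)
    for group, score in rows:
        by_group[group].append(score)
    return {group: percent_meeting(scores, cutoff)
            for group, scores in by_group.items()}


def flag_gaps(rows, cutoff=PROFICIENCY_SCORE, threshold=GAP_THRESHOLD):
    """Return groups whose rate trails the overall rate by at least threshold."""
    overall = percent_meeting([score for _, score in rows], cutoff)
    rates = disaggregate(rows, cutoff)
    return {group: rate for group, rate in rates.items()
            if overall - rate >= threshold}


overall_rate = percent_meeting([score for _, score in records])
group_rates = disaggregate(records)
gaps = flag_gaps(records)

print(f"Overall: {overall_rate:.1f}% met the achievement level")
for group, rate in sorted(group_rates.items()):
    print(f"{group}: {rate:.1f}%")
print("Groups to discuss:", sorted(gaps))
```

Percentages computed from very small groups can swing widely with one or two students, so a flagged gap in a sketch like this is a prompt for faculty conversation rather than a conclusion.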

Reflect and Revise

Reflecting on outcomes data provides an important lens we can use to positively impact student success. Even if minimum achievement levels are met, unless 100% of the students attain proficiency, there is room for improvement. An action plan designed in collaboration with all faculty involved in the specific SLO(s) can be developed to target areas in which students were not successful. Action plans may include:

- providing additional learning experiences (practice) for the students

- refining the curriculum

- revising the SLO and/or the wording of the SLO prompts in an assessment

Action plans may also require additional resources, such as personnel, equipment, time, and additional/different facilities. If budgetary resources are required to implement the action plan, then it may be necessary to request funding through the Program Review process.

It’s also important to celebrate high achievement levels, especially when those are noted following targeted course improvements. Try to specifically identify what instructional practices, assessment tools, etc. were important in enabling more students to meet that outcome and ensure that those valuable aspects are retained in future course iterations.